What is Databricks: An introductory guide to the platform

In today's data-driven world, organizations are constantly seeking robust, scalable, and efficient platforms to handle vast amounts of data and derive actionable insights. With a market share of 15.89% in the big data analytics market, the Databricks data analytics platform brings data engineering, data science, and machine learning together in a single platform.

Let's get to know Databricks in detail, exploring its core features, architecture, and reasons for choosing it over competitors.

What is Databricks?

Databricks is a unified, open analytics platform designed to build, deploy, and maintain enterprise-grade data, analytics, and AI solutions at scale. The Databricks Data Intelligence Platform integrates with cloud storage and security in your cloud account, and it manages and deploys cloud infrastructure on your behalf.

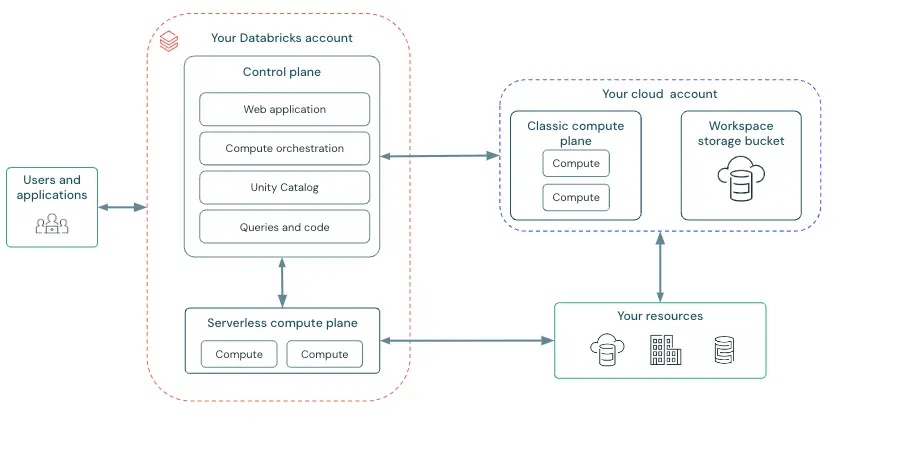

Databricks architecture overview

Databricks handles large data volumes efficiently thanks to its cloud-native architecture. Because it is built on Apache Spark, an open-source distributed computing system, Databricks can process large-scale data analytics and machine learning workloads in parallel across a cluster of machines.
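To give a feel for how Spark spreads work across a cluster, here is a minimal single-machine sketch in plain Python (not Spark code): the input is split into partitions, each partition is aggregated independently, and the partial results are merged. In Spark, each partition would be processed by a task on an executor; the thread pool and the helper names below are illustrative assumptions.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def split_into_partitions(records, num_partitions):
    """Distribute records round-robin into roughly equal partitions."""
    partitions = [[] for _ in range(num_partitions)]
    for i, record in enumerate(records):
        partitions[i % num_partitions].append(record)
    return partitions


def count_words(partition):
    """Aggregate locally within one partition (a map-side combine)."""
    counts = Counter()
    for line in partition:
        counts.update(line.lower().split())
    return counts


def distributed_word_count(records, num_partitions=4):
    partitions = split_into_partitions(records, num_partitions)
    # Each partition is processed independently, as a Spark task would be.
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partial_counts = list(pool.map(count_words, partitions))
    # Merge the per-partition results into the final answer (the "reduce" step).
    return reduce(lambda a, b: a + b, partial_counts, Counter())


lines = ["Spark splits data", "data is processed in partitions", "partitions run in parallel"]
print(distributed_word_count(lines, num_partitions=2)["data"])  # 2
```

Aggregating inside each partition before merging keeps the amount of data exchanged between workers small, which is the same reason Spark combines values per partition before shuffling them.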

Components of the Databricks architecture:

- Control plane: houses Databricks' back-end services, including the graphical interface and REST APIs used for account administration and workspaces.

- Compute plane (formerly known as the data plane): where your data is processed; it also handles external and client communications.

Although it's common to use the customer's cloud account for both data storage and the compute plane, Databricks also supports an architecture in which the compute plane runs in Databricks' own cloud account while the customer's account is used for data storage.
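The control plane's REST APIs can be called over HTTPS with a personal access token. The sketch below only builds a request to the Clusters API list endpoint (`/api/2.0/clusters/list`) without sending it; the workspace URL and token are placeholders, and reading them from the `DATABRICKS_HOST` and `DATABRICKS_TOKEN` environment variables is an assumption of this example. Check the REST API reference for your workspace before relying on it.

```python
import json
import os
import urllib.request


def build_list_clusters_request(host: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a GET request to the Clusters API in the control plane."""
    url = host.rstrip("/") + "/api/2.0/clusters/list"
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


def list_clusters(request: urllib.request.Request):
    """Send the request and decode the JSON body (needs network access and a valid token)."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())


# Placeholder workspace URL and token; replace them with your own values.
host = os.environ.get("DATABRICKS_HOST", "https://example-workspace.cloud.databricks.com")
token = os.environ.get("DATABRICKS_TOKEN", "dapi-EXAMPLE-TOKEN")
request = build_list_clusters_request(host, token)
print(request.get_method(), request.full_url)
```

Keeping request construction separate from sending makes it easy to inspect or test the call without touching a real workspace.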

Databricks: 7 key features

Databricks provides a powerful, flexible, and efficient platform for modern data analytics needs. It suits small businesses and large enterprises working with massive data volumes, making data management and machine learning easy and scalable.

1. Unified data platform

Databricks integrates data engineering, data science, and machine learning on a single platform. This unification simplifies data workflows and promotes collaboration.

2. Access control

Databricks has advanced access control methods to prevent unauthorized access. Role-based access control (RBAC) and fine-grained access features allow companies to limit user rights and better manage data access.

Teams can govern access rights to objects like folders, notebooks, experiments, clusters, pools, alerts, dashboards, queries, and more.
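As a conceptual illustration of role-based access control, the sketch below resolves a user's effective permission on a notebook through their group memberships. The level names mirror Databricks' notebook permission levels (CAN READ, CAN RUN, CAN EDIT, CAN MANAGE), but the grants, groups, users, and resolution logic are hypothetical simplifications, not the Databricks permissions API.

```python
from enum import IntEnum


class NotebookPermission(IntEnum):
    """Ordered levels: each level includes the abilities of the levels below it."""
    NO_PERMISSIONS = 0
    CAN_READ = 1
    CAN_RUN = 2
    CAN_EDIT = 3
    CAN_MANAGE = 4


# Hypothetical grants: notebook path -> {group name: permission level}
GRANTS = {
    "/Shared/sales_report": {
        "analysts": NotebookPermission.CAN_RUN,
        "data-engineers": NotebookPermission.CAN_MANAGE,
    },
}

# Hypothetical group membership: user -> set of group names
USER_GROUPS = {
    "alice@example.com": {"analysts"},
    "bob@example.com": {"analysts", "data-engineers"},
}


def effective_permission(user: str, notebook_path: str) -> NotebookPermission:
    """Return the highest level the user receives through any of their groups."""
    object_grants = GRANTS.get(notebook_path, {})
    levels = [
        object_grants.get(group, NotebookPermission.NO_PERMISSIONS)
        for group in USER_GROUPS.get(user, set())
    ]
    return max(levels, default=NotebookPermission.NO_PERMISSIONS)


def is_allowed(user: str, notebook_path: str, required: NotebookPermission) -> bool:
    return effective_permission(user, notebook_path) >= required


print(is_allowed("alice@example.com", "/Shared/sales_report", NotebookPermission.CAN_RUN))   # True
print(is_allowed("alice@example.com", "/Shared/sales_report", NotebookPermission.CAN_EDIT))  # False
```

Granting permissions to groups rather than individual users keeps access rules manageable as teams grow: adding someone to a group gives them exactly that group's rights.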

3. Scalability

Databricks' architecture provides scalable compute resources, so businesses can handle workloads of different sizes by scaling clusters up or down as demand changes.
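Databricks clusters can be configured to autoscale between a minimum and a maximum number of workers. Databricks' actual autoscaling algorithm is internal and not shown here; the sketch below is a hypothetical policy (enough workers for the pending tasks, clamped to the configured bounds) meant only to illustrate what those bounds do. The `tasks_per_worker` parameter is an assumption of this example.

```python
import math
from dataclasses import dataclass


@dataclass
class AutoscaleConfig:
    # Lower and upper bounds, mirroring the min_workers/max_workers autoscale settings.
    min_workers: int
    max_workers: int


def target_workers(pending_tasks: int, tasks_per_worker: int, config: AutoscaleConfig) -> int:
    """Hypothetical policy: size the cluster to the pending work, clamped to the configured range."""
    if tasks_per_worker <= 0:
        raise ValueError("tasks_per_worker must be positive")
    needed = math.ceil(pending_tasks / tasks_per_worker)
    return max(config.min_workers, min(config.max_workers, needed))


config = AutoscaleConfig(min_workers=2, max_workers=8)
for pending in (0, 10, 100):
    print(f"{pending} pending tasks -> {target_workers(pending, 4, config)} workers")
```

With these settings the cluster never drops below 2 workers when idle and never exceeds 8 under heavy load, which is how the bounds cap both latency and cost.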

4. High performance

With Apache Spark's in-memory processing capabilities, Databricks delivers high-performance analytics and machine learning. This enables fast data processing and near-real-time analytics.

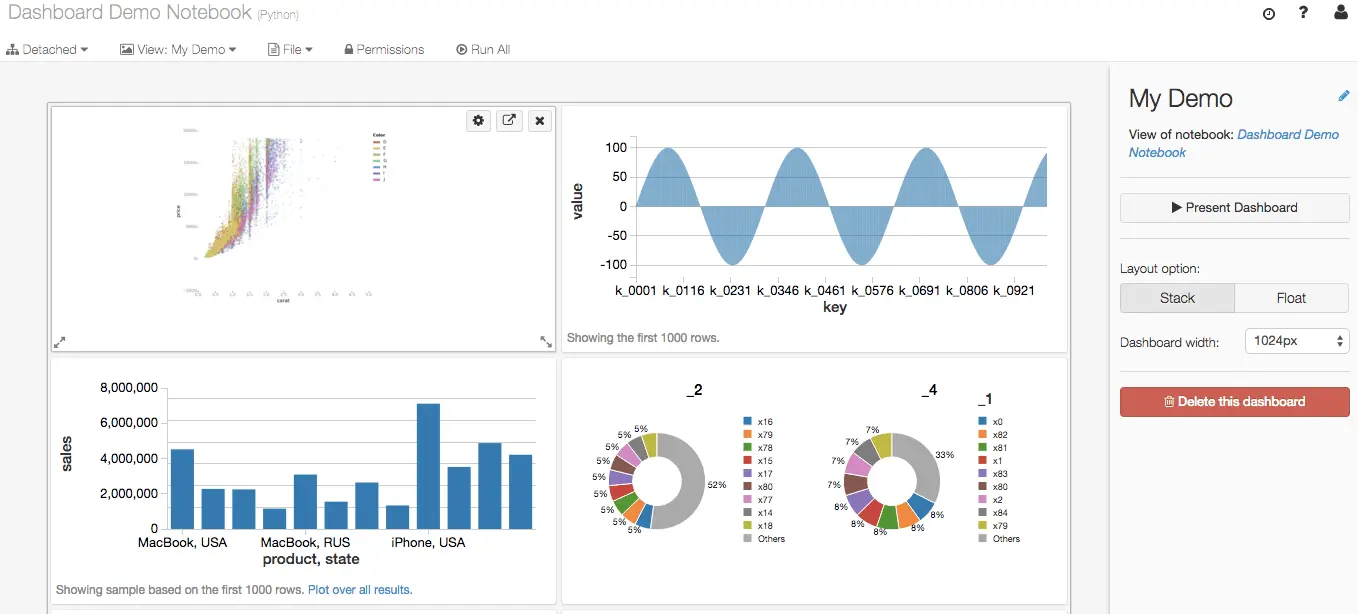

5. Collaborative notebooks

Databricks has interactive notebooks supporting multiple languages, including SQL, Python, R, and Scala. In Databricks, notebooks are the key tool for creating data science and machine learning workflows and collaborating with colleagues. Notebooks also support real-time coauthoring, automatic versioning, and built-in data visualizations.

6. Integrated Machine Learning (ML)

Databricks offers integrated tools for machine learning, including automated machine learning (AutoML), MLflow for tracking experiments, and model management.

7. Data governance

Data governance is the set of rules and processes an organization uses to manage its data assets safely. Centralized data governance is a key element of the lakehouse, which brings data warehousing and AI use cases together on a single platform.

Governance tools such as audits and compliance controls allow enterprises to track and monitor data access, manage the data lifecycle, and meet their legal obligations and compliance goals.

Databricks ecosystem & tools

Databricks' robust ecosystem includes various tools and integrations designed to optimize the user experience and enhance functionality.

- UI Bakery: a low-code platform that integrates with Databricks.

It lets you build a solution for your use case on top of your database. Whether you need an admin panel, a customer support portal, or dashboards, UI Bakery serves as a Databricks GUI tool for data visualization, dataset management, and executing SQL queries.

- MLflow: a managed service built on the open-source MLflow platform, which was developed by Databricks. It is designed to manage the entire machine learning lifecycle with the reliability, security, and scalability that large projects require.

- Delta Lake: the optimized storage layer that serves as the foundation for tables in a lakehouse on Databricks. Delta Lake lets users work with a single copy of data for both batch and streaming operations, enabling incremental processing at scale.

- Azure Databricks: Deep integration with Microsoft Azure for cloud-based data analytics.

- AWS Databricks: Optimized version of Databricks for Amazon Web Services.
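Delta Lake can serve batch and streaming reads from one copy of data because both are derived from an ordered transaction log. The sketch below is a heavily simplified, hypothetical model of that idea: each commit is a JSON list of `add`/`remove` actions, loosely modeled on the commit files in Delta Lake's `_delta_log` directory. Real Delta Lake also tracks metadata, protocol versions, checkpoints, and statistics, none of which is modeled here.

```python
import json

# Hypothetical commit log: index = table version, value = JSON list of actions.
commit_log = [
    '[{"add": {"path": "part-000.parquet"}}, {"add": {"path": "part-001.parquet"}}]',
    '[{"add": {"path": "part-002.parquet"}}]',
    '[{"remove": {"path": "part-000.parquet"}}, {"add": {"path": "part-003.parquet"}}]',
]


def snapshot(log, version):
    """Batch read: replay commits 0..version to find the live data files."""
    files = set()
    for commit in log[: version + 1]:
        for action in json.loads(commit):
            if "add" in action:
                files.add(action["add"]["path"])
            elif "remove" in action:
                files.discard(action["remove"]["path"])
    return files


def files_added_since(log, last_processed_version):
    """Incremental read: only files added after the last processed version."""
    new_files = []
    for commit in log[last_processed_version + 1 :]:
        for action in json.loads(commit):
            if "add" in action:
                new_files.append(action["add"]["path"])
    return new_files


print(sorted(snapshot(commit_log, 2)))   # ['part-001.parquet', 'part-002.parquet', 'part-003.parquet']
print(files_added_since(commit_log, 0))  # ['part-002.parquet', 'part-003.parquet']
```

Because every reader consults the same log, a batch job can read a consistent snapshot at any version while a streaming job picks up only what changed since its last run.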

Databricks: pricing breakdown

Databricks offers pay-as-you-go pricing with per-second billing, so there are no upfront costs or required recurring contracts; you pay only for the platform resources you use:

- Compute costs: Databricks bills compute in Databricks Units (DBUs), a normalized unit of processing capability, and the DBU rate depends on the compute type, VM size, and pricing tier. For classic compute, the underlying virtual machines (VMs) are billed separately by your cloud provider.

- Storage costs: charged based on the actual amount of data stored in the cloud, for both managed and external storage. When data lives in your own cloud account's object storage, these charges typically appear on your cloud provider's bill.

- Additional services: support, training, and consulting can be added based on your requirements.
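Per-second billing means an hourly rate is pro-rated to the exact runtime. The sketch below shows that arithmetic for the DBU portion of a compute bill; the DBU consumption rate, price per DBU, node count, and runtime are all hypothetical numbers, and the separate cloud-provider VM charges are not included.

```python
from decimal import Decimal

SECONDS_PER_HOUR = Decimal(3600)


def compute_cost(dbu_per_hour: Decimal, price_per_dbu: Decimal,
                 runtime_seconds: int, nodes: int = 1) -> Decimal:
    """Pro-rate an hourly DBU rate to the exact number of seconds used."""
    hours = Decimal(runtime_seconds) / SECONDS_PER_HOUR
    return (dbu_per_hour * nodes * hours * price_per_dbu).quantize(Decimal("0.01"))


# Hypothetical: 0.75 DBU/hour per node, $0.40 per DBU, 4 nodes running for 20 minutes.
cost = compute_cost(Decimal("0.75"), Decimal("0.40"), runtime_seconds=1200, nodes=4)
print(cost)  # 0.40
```

Using `Decimal` instead of floats avoids binary rounding surprises when working with currency amounts.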

Reasons to choose Databricks over competitors

Databricks is among the leading data analytics platforms, with Snowflake as one of its main competitors. Here are some reasons for choosing Databricks over other tools:

1. Cost-efficient pricing model

- The pay-as-you-go pricing model ensures you pay only for the resources you use.

- Databricks' separation of compute and storage lets you scale and pay for each independently, enabling precise cost management and avoiding unnecessary spending.

2. Unified analytics platform

- It combines the best of data lakes and data warehouses through Delta Lake, providing reliable data storage and management.

- Supports a wide range of data processing workloads, like batch, streaming, and machine learning, on a single platform.

3. Scalability and performance

- Can automatically scale computing resources up or down according to your workload demands, ensuring efficient use of resources.

- Optimized for performance with features like auto-optimizing tables and advanced caching mechanisms.

4. Robust security and compliance

- Packed with enterprise-grade security features, such as role-based access control (RBAC) and encryption at rest and in transit, ensuring compliance with industry standards.

- Provides comprehensive data governance tools to manage data access, quality, and lineage.

5. Extensive ecosystem integration

- Supports integration with a wide range of data sources, GUI tools like UI Bakery, BI tools, and cloud services, providing flexibility in building end-to-end data pipelines.

- Offers APIs and connectors for seamless integration with third-party tools and services.

Let's wrap it up

Databricks offers a strong combination of flexibility, performance, and ease of use. Its cloud-native architecture is complemented by features like Delta Lake and collaborative notebooks. Together, these make Databricks a powerful choice for modern data management and analytics needs.