Snowflake vs. Databricks: A Detailed Comparison Guide 2025

Organizations rely on data warehouses to make data-driven decisions based on the intelligence they extract from collected data, but traditional data warehouses can no longer keep up with growing data volume, velocity, and variety.

The market offers a range of more advanced alternatives to traditional data warehouses. Today we are going to discuss two of the leaders: Snowflake and Databricks.

Databricks is a unified, open analytics platform that combines data warehouses and data lakes in a single place. Snowflake, by contrast, offers a cloud data warehouse delivered as software-as-a-service (SaaS), which means less maintenance and easier scaling.

Let's explore each tool in detail and compare them based on key features.

Snowflake vs. Databricks: platform overview

Before moving to their differences, let's first get to know the tools separately.

Snowflake

Snowflake is a cloud-based data warehousing platform: a fully managed solution for storing and analyzing large volumes of data.

Core features:

- Near-zero management;

- Scalability;

- Data sharing;

- Data caching;

- Snowpark;

- Micro-partitioned data storage;

- Security.

Key use cases:

- Advertising, media, and entertainment: enhances audience analytics, boosting engagement and lifetime value with personalized experiences.

- Financial services: offers a complete customer view across business lines, reduces costs, and improves risk management and regulatory compliance with centralized data for trade surveillance and fraud detection.

- Healthcare & life sciences: builds comprehensive patient and member profiles by combining clinical, claims, consumer, and socio-economic data.

- Public sector: offers inter-agency data sharing, allowing governments to collaborate while controlling sensitive data.

- Retail & consumer goods: enhances customer profiles and personalizes experiences.

- Technology: helps companies consolidate data across their stacks, creating a single source of truth.

- Manufacturing: allows integration of IT and OT data, enabling smart manufacturing and data-driven decisions.

Pros:

- Fully managed service: Snowflake handles the underlying infrastructure, so there is little management overhead.

- Ease of use: user-friendly interface with minimal database administration required.

- Scalability: compute and storage are separate and scale independently, and multi-cluster warehouses can automatically add or remove clusters as workload concurrency changes.

- High performance: the service offers automatic query optimization and support for concurrent workloads.

- Data sharing: secure and easy data sharing capabilities across different organizations and cloud platforms.

Cons:

- Limited machine learning capabilities: offers fewer native machine learning tools than Databricks, so advanced ML often requires integration with other platforms.

- Vendor lock-in: as a proprietary platform, Snowflake may lead to vendor lock-in.

- Complexity in data transformation: Snowflake’s data transformation process can be more complex when compared to other platforms.

- Third-party tool dependency: usually requires integrations with third-party tools for advanced analytics and machine learning capabilities.

Databricks

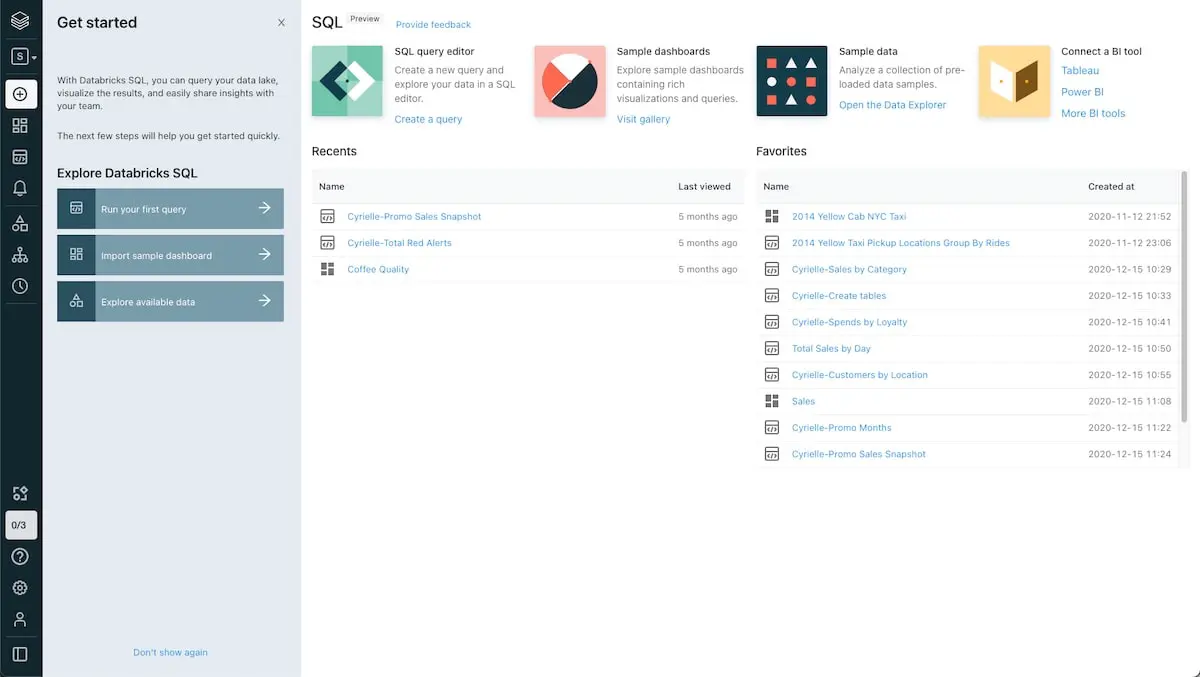

Databricks is a unified, open analytics platform for developing, deploying, sharing, and managing enterprise-grade data, analytics, and AI solutions at scale. It integrates with cloud storage and security in your cloud account, and it manages and deploys cloud infrastructure on your behalf.

Core features:

- Unified analytics platform;

- Collaborative notebooks;

- Integrated data management;

- Machine learning;

- Real-time data processing;

- Advanced analytics;

- Cloud integration;

- Interactive dashboards.

Key use cases:

- Energy and utilities: integrates and analyzes IoT data from smart meters and sensors, enhancing predictive maintenance and operational efficiency.

- Telecommunications: analyzes large volumes of network data, improves churn prediction, and personalizes services through advanced analytics.

- Education: supports personalized learning experiences by analyzing student performance data, identifying trends, and enabling data-driven decisions.

- Travel and hospitality: analyzes customer preferences and behaviors, optimizes pricing strategies, and improves operational efficiency through predictive maintenance.

- Logistics and transportation: improves delivery times and reduces operational costs through predictive analytics.

- Pharmaceuticals: accelerates drug discovery and development by integrating and analyzing diverse data sources, including clinical trials, genomics, and real-world evidence.

- E-commerce: analyzes customer behavior and preferences, optimizes inventory management, and improves fraud detection through advanced analytics.

- Insurance: improves risk assessment and fraud detection by integrating and analyzing claims, customer, and external data.

Pros:

- Advanced analytics and machine learning: built-in support for machine learning and advanced real-time analytics.

- Collaborative environment: collaborative notebooks for real-time code sharing and support for multiple languages (Python, R, Scala, SQL).

- Highly scalable with optimized performance: well suited to big data workloads, with cluster auto-scaling.

- Integration with Apache Spark: useful for big data processing.

- Flexibility: runs on multiple cloud platforms (AWS, Azure, and Google Cloud).

Cons:

- Complex setup and management: usually takes more setup and ongoing management than Snowflake.

- Steeper learning curve: can be complex for those not familiar with big data and Spark environments.

- Data storage cost: may incur higher storage costs compared to other platforms.

Architecture and design

Snowflake

- Cloud-native design offers a fully managed, scalable, and resilient service. It ensures high availability by storing data redundantly across multiple availability zones, with optional replication across regions.

- Separation of compute and storage allows for independent scaling, optimal performance, and cost efficiency.

- Multi-cluster architecture enables independent compute clusters to operate concurrently, enhancing concurrency and workload isolation.
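Snowflake lets a multi-cluster warehouse run between a minimum and a maximum number of clusters (its `MIN_CLUSTER_COUNT` and `MAX_CLUSTER_COUNT` settings), and all of those clusters read the same shared storage. The sketch below is a deliberately simplified, hypothetical model of that idea: it assumes each cluster can run a fixed number of concurrent queries and picks the cluster count that avoids queuing within the allowed range. Snowflake's real scaling policies are more nuanced, so treat the numbers as illustrative only.

```python
import math
from dataclasses import dataclass


@dataclass
class MultiClusterWarehouse:
    """Toy model of a multi-cluster warehouse (hypothetical, simplified)."""
    min_clusters: int
    max_clusters: int
    queries_per_cluster: int  # assumption: fixed concurrency per cluster

    def clusters_needed(self, concurrent_queries: int) -> int:
        # Enough clusters to run every concurrent query without queuing...
        wanted = math.ceil(concurrent_queries / self.queries_per_cluster)
        # ...clamped to the configured minimum and maximum.
        return max(self.min_clusters, min(self.max_clusters, wanted))

    def queued(self, concurrent_queries: int) -> int:
        # Queries that must wait once the maximum cluster count is reached.
        capacity = self.clusters_needed(concurrent_queries) * self.queries_per_cluster
        return max(0, concurrent_queries - capacity)
```

Because storage is shared, adding clusters in this model only adds compute capacity; no data has to be copied, which is the practical benefit of separating compute from storage.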

Databricks

- Lakehouse architecture combines the scalability of data lakes with the performance of data warehouses, managing structured and unstructured data in a unified system.

- Integration with Apache Spark enables high-performance data processing and supports complex data transformations and machine-learning workflows.

- Collaborative workspace offers interactive notebooks, version control, and integrated workflows, fostering collaboration among data engineers, scientists, and analysts.

Data processing capabilities

Snowflake

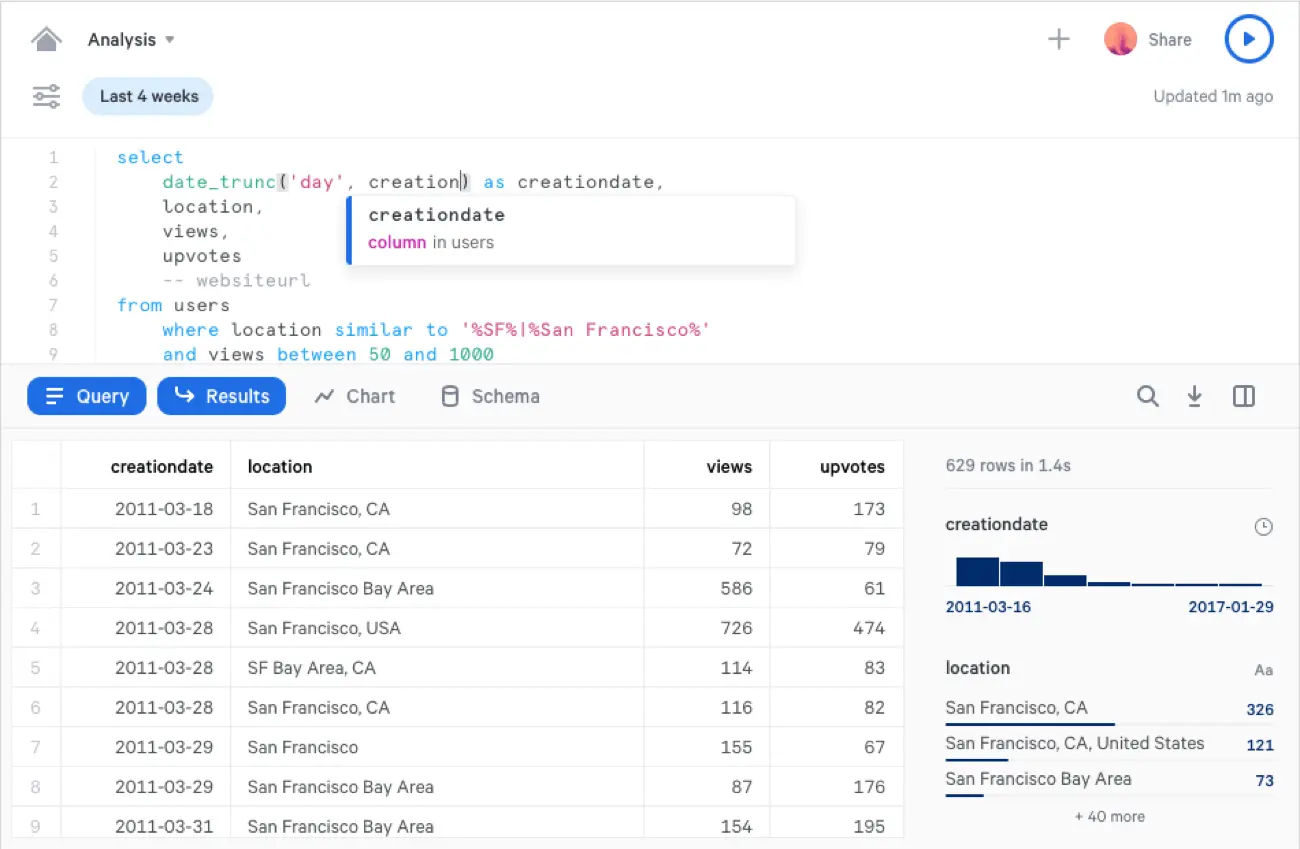

- SQL-based processing: Snowflake is built around SQL, allowing users to run complex queries, perform data transformations, and manage data with SQL.

- Manages diverse workloads, such as data warehousing, data lakes, data sharing, and data science.

- It delivers high-performance query execution thanks to its architecture, which separates compute and storage.

Databricks

- Uses Apache Spark-based processing that enables large-scale data analytics and data engineering tasks.

- Supports batch and stream processing, allowing users to handle real-time data streams and historical batch data with ease.

- Integrates machine learning and graph processing libraries, supporting advanced analytics workloads.
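Spark's Structured Streaming treats a stream as an unbounded table that is processed incrementally, by default in micro-batches, so the same logic can run over historical batch data and live streams. The pure-Python sketch below mimics that idea without Spark: a word-count style aggregation computed once over the full dataset, and again incrementally over micro-batches with running state. The function names are hypothetical.

```python
from collections import Counter
from typing import Iterable, Iterator


def batch_counts(events: list[str]) -> Counter:
    """Batch: process the whole historical dataset in one pass."""
    return Counter(events)


def streaming_counts(micro_batches: Iterable[list[str]]) -> Iterator[Counter]:
    """Streaming: fold each micro-batch into running state as it arrives."""
    state: Counter = Counter()
    for batch in micro_batches:
        state.update(batch)    # incrementally update the aggregate
        yield Counter(state)   # emit a snapshot of the current result


events = ["click", "view", "click", "buy", "view", "click"]
micro_batches = [events[0:2], events[2:4], events[4:6]]
snapshots = list(streaming_counts(micro_batches))
```

After the last micro-batch, the streaming result equals the batch result, which is the property that lets one pipeline definition serve both modes.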

Data storage solutions

Snowflake

Snowflake manages every aspect of data storage, including organization, file size, structure, compression, and metadata, and stores the data in cloud storage in its internal optimized, compressed, columnar format. Customers cannot see or access these data objects directly; the data is accessible only through SQL query operations run in Snowflake.

Snowflake supports:

- Numerical;

- String;

- Logical;

- Date & time;

- Semi-structured;

- Unstructured data types.
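Because Snowflake keeps metadata about each micro-partition, such as the range of values in each column, a query with a filter can skip partitions that cannot contain matching rows (partition pruning). The pure-Python sketch below illustrates the idea with a single hypothetical `order_date` column; the class and function names are invented for this example and do not reflect Snowflake's internal implementation.

```python
from dataclasses import dataclass


@dataclass
class MicroPartition:
    """Hypothetical stand-in for one micro-partition and its column metadata."""
    rows: list[dict]
    min_date: str  # smallest order_date in the partition (ISO yyyy-mm-dd)
    max_date: str  # largest order_date in the partition


def make_partition(rows: list[dict]) -> MicroPartition:
    # Record min/max metadata when the partition is written.
    dates = [r["order_date"] for r in rows]
    return MicroPartition(rows, min(dates), max(dates))


def scan(partitions: list[MicroPartition], start: str, end: str):
    """Return matching rows and the number of partitions actually read."""
    hits, scanned = [], 0
    for p in partitions:
        # Prune: skip partitions whose [min, max] range cannot overlap [start, end].
        if p.max_date < start or p.min_date > end:
            continue
        scanned += 1
        hits.extend(r for r in p.rows if start <= r["order_date"] <= end)
    return hits, scanned
```

ISO-formatted date strings compare correctly as plain strings, which keeps the sketch free of date parsing.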

Databricks

Databricks provides data storage through Delta Lake, an open-source storage layer that brings reliability to data lakes. Delta Lake supports ACID transactions and scalable metadata handling, and it unifies streaming and batch data processing. Databricks supports a wide range of data structures and formats to cater to various data management needs. These include:

- Structured data: traditional relational data with predefined schemas.

- Semi-structured data and file formats: JSON, XML, Avro, and Parquet.

- Unstructured data: text files, images, videos, and binary data.

- Complex types: arrays, maps, and structs, enabling complex data manipulations and transformations.

Security and compliance

Snowflake

- Access control: regulates who can interact with database objects, combining elements of both Discretionary Access Control (DAC) and Role-based Access Control (RBAC).

- Encryption and data protection: all data within Snowflake is encrypted by default using strong AES 256-bit encryption with a hierarchical key model rooted in a hardware security module.

- Compliance standards: ISO/IEC 27001, GDPR, CCPA, HIPAA, HITRUST, SOC 1 and 2 Type II, PCI DSS, and FedRAMP, among others.
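In Snowflake's model, each object has an owner that can grant access to it (the DAC part), privileges are granted to roles, and a role granted to another role passes its privileges up the hierarchy (the RBAC part). The sketch below is a hypothetical, simplified in-memory model of how those two ideas combine; the class, methods, and role names are illustrative and are not Snowflake's API, which is driven by SQL `GRANT` statements.

```python
from collections import defaultdict


class ToyAccessControl:
    """Hypothetical, simplified model of role-based plus discretionary access."""

    def __init__(self) -> None:
        self.privileges = defaultdict(set)     # role -> {(privilege, object)}
        self.inherits_from = defaultdict(set)  # role -> roles granted to it
        self.owner: dict[str, str] = {}        # object -> owning role (DAC)

    def create_object(self, obj: str, owner_role: str) -> None:
        # DAC: the role that creates an object owns it.
        self.owner[obj] = owner_role

    def grant_role(self, role: str, to_role: str) -> None:
        # RBAC hierarchy: to_role inherits every privilege of role.
        self.inherits_from[to_role].add(role)

    def effective_roles(self, role: str) -> set[str]:
        # Walk the hierarchy to collect the role and all roles it inherits.
        seen, stack = set(), [role]
        while stack:
            current = stack.pop()
            if current not in seen:
                seen.add(current)
                stack.extend(self.inherits_from[current])
        return seen

    def grant_privilege(self, granting_role: str, privilege: str, obj: str, to_role: str) -> None:
        # DAC: only the owner (directly or through inheritance) may grant access.
        if self.owner.get(obj) not in self.effective_roles(granting_role):
            raise PermissionError(f"{granting_role} cannot grant on {obj}")
        self.privileges[to_role].add((privilege, obj))

    def is_allowed(self, role: str, privilege: str, obj: str) -> bool:
        roles = self.effective_roles(role)
        if self.owner.get(obj) in roles:
            return True  # owners hold all privileges on their objects
        return any((privilege, obj) in self.privileges[r] for r in roles)
```

For example, if an `analyst` role receives `USAGE` on a database and is then granted to a `manager` role, the manager inherits that access without a separate grant.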

Databricks

- Access control: access control lists (ACLs) let admins manage permissions on all objects in their workspace.

- Encryption and data protection: on AWS, supports encrypting data in S3 through server-side encryption.

- Compliance standards: HIPAA, Infosec Registered Assessors Program (IRAP), PCI DSS, FedRAMP High, and FedRAMP Moderate.

Ecosystem and integrations

Snowflake

Data integration tools:

- It offers JDBC, ODBC, Python, Spark, and Node.js connectors for integration with various applications and services.

- Can integrate with platforms like Fivetran, Stitch, and Segment.

Business Intelligence (BI) and analytics tools:

- Native connectors for BI tools like Tableau, Power BI, Looker, and Qlik.

- Can integrate with platforms like DataRobot, H2O.ai, and custom machine learning workflows using Snowflake's Python and Spark connectors.

Data sharing with external partners:

- Snowflake runs on AWS, Azure, and GCP, and its multi-cloud capabilities let organizations share data with external partners across cloud platforms and regions.

GUI tools:

- UI Bakery to build Snowflake dashboards and admin panels, creating internal apps and visualizing your data in one place.

Databricks

ETL and data integration tools:

- Apache Spark for powerful distributed data processing.

- Can integrate with tools like Informatica, Talend, Fivetran, and Matillion for ETL workflows.

APIs and SDKs:

- REST APIs provide programmatic access to Databricks features, such as managing clusters, jobs, and data.

Data sharing options:

- Delta Sharing allows secure and scalable sharing of live data from Delta Lake tables with other Databricks users or external partners.

GUI tools:

- UI Bakery for Databricks to build internal apps and visualize your data workflows.

Cost and pricing models

Snowflake

Snowflake offers a free trial. Beyond that, it offers four editions, each priced per credit (example on-demand list prices; actual rates vary by cloud provider and region):

- Standard: $2 per credit (a credit is the unit of usage consumed when a customer uses resources, such as running a virtual warehouse or performing tasks in the cloud services layer);

- Enterprise: $3 per credit;

- Business Critical: $4 per credit;

- Virtual Private Snowflake: pricing available on request.
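Because Snowflake bills compute in credits, a warehouse's compute cost is roughly credits consumed × price per credit. The sketch below applies that arithmetic to the list prices above, assuming standard warehouse credit rates that double with each size step (1 credit per hour for X-Small up to 16 for X-Large) and per-second billing with a 60-second minimum each time a warehouse starts. Storage and other charges are ignored, and the size abbreviations and function name are hypothetical.

```python
# Credits consumed per hour by standard warehouse sizes.
CREDITS_PER_HOUR = {"XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16}

# Example on-demand list prices per credit from the text above.
PRICE_PER_CREDIT = {"Standard": 2.00, "Enterprise": 3.00, "Business Critical": 4.00}


def warehouse_cost(size: str, seconds_running: float, edition: str) -> float:
    """Estimate compute cost for one run of a warehouse, from start to suspend."""
    billed_seconds = max(seconds_running, 60)  # 60-second minimum per start
    credits = CREDITS_PER_HOUR[size] * billed_seconds / 3600
    return credits * PRICE_PER_CREDIT[edition]
```

For example, a Medium warehouse (4 credits per hour) running for two hours on Enterprise consumes 8 credits, or $24 at $3 per credit.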

Snowflake is generally cheaper for data warehousing, ETL operations, and scalable query processing, thanks to its efficient storage and query optimization, cost-effective SQL-based transformations, and pay-as-you-go compute resources.

Databricks

Databricks has a free trial. Its pricing structure is fairly complex: users are charged for the resources they consume on the platform, measured in Databricks Units (DBUs). The key element of this pricing model is the cost of clusters.

The platform provides several tiers of clusters, from a small single node to a large multi-node cluster. Here are some pricing examples (list prices vary by cloud provider and region):

- All-purpose compute: Standard costs $0.40 per DBU, Premium costs $0.55 per DBU.

- Jobs compute: Standard costs $0.15 per DBU, Premium costs $0.30 per DBU.
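Databricks cost can be estimated as DBUs consumed × price per DBU, where the number of DBUs a cluster consumes per hour depends on its instance types and size. On classic compute, the cloud provider also bills separately for the underlying virtual machines. The sketch below uses the example list prices above; `dbu_per_node_hour` and `vm_price_per_node_hour` are hypothetical inputs you would look up for your actual instance types.

```python
# Example list prices per DBU from the text above: (workload, tier) -> USD.
DBU_PRICE = {
    ("all-purpose", "Standard"): 0.40,
    ("all-purpose", "Premium"): 0.55,
    ("jobs", "Standard"): 0.15,
    ("jobs", "Premium"): 0.30,
}


def databricks_cost(workload: str, tier: str, dbu_per_node_hour: float,
                    nodes: int, hours: float,
                    vm_price_per_node_hour: float = 0.0) -> dict:
    """Estimate cost of running a cluster; returns a simple breakdown."""
    dbus = dbu_per_node_hour * nodes * hours
    databricks_fee = dbus * DBU_PRICE[(workload, tier)]
    cloud_fee = vm_price_per_node_hour * nodes * hours  # billed by the cloud provider
    return {"dbus": dbus, "databricks": databricks_fee,
            "cloud": cloud_fee, "total": databricks_fee + cloud_fee}
```

Running the same hypothetical 4-node, 3-hour workload (12 DBUs) on Premium as a jobs cluster rather than an all-purpose cluster changes only the rate: about $3.60 vs. $6.60 in Databricks fees under these assumptions, which is why scheduled pipelines are typically run on jobs compute.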

Databricks is more cost-effective for big data processing, complex data engineering, real-time analytics, and machine learning, thanks to its distributed computing capabilities, robust data engineering tools, real-time data handling, and integrated machine learning environment.

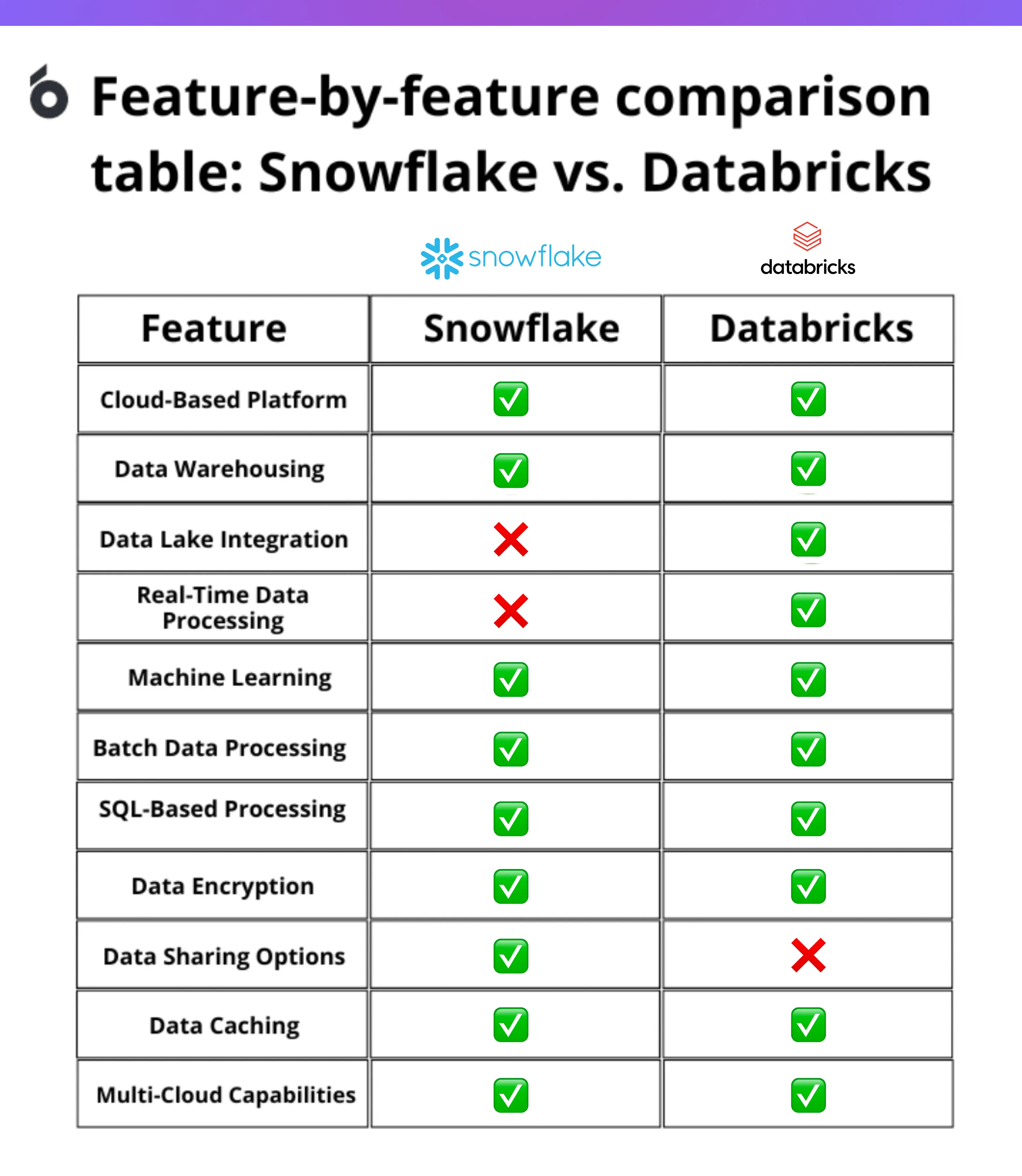

Feature-by-feature comparison table: Snowflake vs. Databricks

Last thoughts

Both Snowflake and Databricks offer robust solutions for data management but cater to different needs.

Choosing between these two will depend on your specific requirements and budget.

Snowflake is best for those seeking a fully managed, cloud-based data warehousing solution with strong security, scalability, and ease of use. It tends to suit industries such as finance and healthcare, where minimal maintenance and straightforward integration are priorities.

Databricks excels with its unified platform that integrates data lakes and warehouses, real-time processing, and advanced machine learning capabilities. It is a strong fit for sectors such as energy and telecommunications that need complex analytics and real-time insights.